In this post you will see what orthogonal matrices are and how they relate to the inverse of a matrix. You will also see several examples and the formula satisfied by every 2×2 orthogonal matrix, which lets you find one quickly. Finally, you will find the properties and applications of these peculiar matrices, together with a typical solved exam exercise.

What is an orthogonal matrix?

The definition of an orthogonal matrix is as follows:

An orthogonal matrix is a square matrix with real entries whose product with its transpose is equal to the identity matrix. That is, the following condition is met:

$$A\cdot A^T = A^T\cdot A = I$$

where $A$ is an orthogonal matrix, $A^T$ is its transpose and $I$ is the identity matrix.

For this condition to be fulfilled, the columns and rows of an orthogonal matrix must be orthogonal unit vectors; in other words, they must form an orthonormal basis. That is why some mathematicians also call them orthonormal matrices.
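The condition can also be checked numerically. The following is a minimal sketch in plain Python, assuming matrices are stored as lists of rows; the helper names `transpose`, `matmul` and `is_orthogonal` and the two example matrices are hypothetical, chosen only for this illustration. Entry (i, j) of A·A^T is the dot product of rows i and j of A, so comparing the product with the identity is exactly the requirement that the rows be orthonormal. A tolerance is used because floating-point entries such as cos θ are rarely exact.

```python
import math

def transpose(a):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(row) for row in zip(*a)]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def is_orthogonal(a, tol=1e-9):
    """Check A·A^T = I entry by entry, within a floating-point tolerance."""
    n = len(a)
    if any(len(row) != n for row in a):
        return False  # only square matrices can be orthogonal
    product = matmul(a, transpose(a))
    return all(
        math.isclose(product[i][j], 1.0 if i == j else 0.0, abs_tol=tol)
        for i in range(n)
        for j in range(n)
    )

# A permutation matrix: its rows are the standard basis vectors, reordered.
p = [[0, 1, 0],
     [0, 0, 1],
     [1, 0, 0]]
# Its first row (1, 1) is not a unit vector, so this matrix is not orthogonal.
m = [[1, 1],
     [0, 1]]

print(is_orthogonal(p))  # True
print(is_orthogonal(m))  # False
```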

Inverse of an orthogonal matrix

Another way to explain the concept of an orthogonal matrix is by means of the inverse matrix, because the transpose of an orthogonal matrix is equal to its inverse, $A^T = A^{-1}$.

To fully understand this theorem, it is important to know how to invert a matrix.

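It is easy to prove that the inverse of an orthogonal matrix is equal to its transpose, using the orthogonal matrix condition and the main property of inverse matrices:

$$A\cdot A^T = A^T\cdot A = I \qquad A\cdot A^{-1} = A^{-1}\cdot A = I \qquad\Longrightarrow\qquad A^{-1} = A^T$$

Since $A^T$ satisfies the defining property of the inverse, and the inverse of a matrix is unique, $A^T$ must be $A^{-1}$.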

Thus, an orthogonal matrix is always an invertible, or non-degenerate, matrix.
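A practical consequence of $A^{-1} = A^T$ is that a linear system $A\cdot x = b$ with an orthogonal coefficient matrix can be solved with just a transpose and a matrix-vector product. The plain-Python sketch below illustrates this; the rotation by 30°, the vector values and the helper names are arbitrary choices made for the illustration.

```python
import math

def transpose(a):
    """Transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*a)]

def matvec(a, v):
    """Matrix-vector product."""
    return [sum(x * y for x, y in zip(row, v)) for row in a]

# Rotation by 30 degrees, an orthogonal matrix chosen for illustration.
t = math.pi / 6
a = [[math.cos(t), -math.sin(t)],
     [math.sin(t),  math.cos(t)]]

x_true = [2.0, -1.0]
b = matvec(a, x_true)            # right-hand side b = A·x

# Since A^{-1} = A^T, the solution of A·x = b is x = A^T·b:
# no elimination and no determinant are needed.
x = matvec(transpose(a), b)
print([round(value, 12) for value in x])   # [2.0, -1.0]
```

Transposing is far cheaper than computing a general inverse, which is one reason orthogonal matrices are convenient in numerical work.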

Examples of orthogonal matrices

Next we are going to see several examples of orthogonal matrices to fully understand their meaning.

Example of a 2×2 orthogonal matrix

The following matrix is a 2×2 orthogonal matrix:

We can check that it is orthogonal by calculating its product with its transpose:

Since the result is the identity matrix, A is indeed an orthogonal matrix.

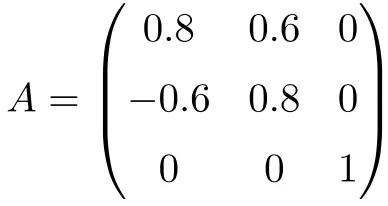

Example of a 3×3 orthogonal matrix

The following matrix is an orthogonal matrix of order 3:

It can be shown that it is orthogonal by multiplying matrix A by its transpose:

The product is the identity matrix; therefore, A is an orthogonal matrix.

Formula to find a 2×2 orthogonal matrix

Now we are going to prove that all orthogonal matrices of order 2 follow the same pattern and, along the way, deduce a simple formula for finding a 2×2 orthogonal matrix.

Let A be a generic 2×2 matrix:

For this matrix to be orthogonal, the following matrix equation must be satisfied:

Solving the matrix multiplication we obtain the following equations:

If we look closely, these equalities are very similar to the fundamental Pythagorean trigonometric identity:

Therefore, the terms that satisfy equations (1) and (3) are:

In addition, substituting the values in the second equation we obtain the relation between both angles:

That is, one of the following two conditions must be met:

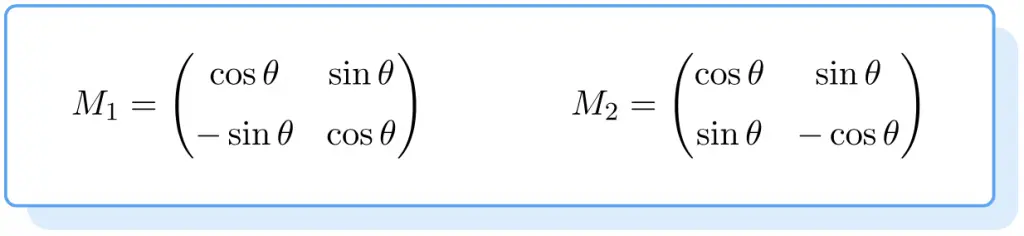

So, in conclusion, orthogonal matrices must have the structure of one of the following two matrices:

Where is a real number.
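As a quick numerical sanity check, here is a plain-Python sketch. Writing a generic 2×2 matrix as [[a, b], [c, d]], the condition A·A^T = I amounts to a² + b² = 1 (1), ac + bd = 0 (2) and c² + d² = 1 (3). The hypothetical helpers `form_1` and `form_2` build the two families [[cos θ, sin θ], [−sin θ, cos θ]] and [[cos θ, sin θ], [sin θ, −cos θ]], and the loop checks the three equations for a handful of arbitrarily chosen angles. Testing a few angles does not replace the proof; it only guards against sign slips.

```python
import math

def form_1(theta):
    """First form: [[cos θ, sin θ], [-sin θ, cos θ]]."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [-s, c]]

def form_2(theta):
    """Second form: [[cos θ, sin θ], [sin θ, -cos θ]]."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]

def left_sides(m):
    """Left-hand sides a²+b², ac+bd, c²+d² of equations (1)-(3) for m = [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    return a * a + b * b, a * c + b * d, c * c + d * d

for theta in (0.0, 0.5, math.pi / 3, 2.0, -1.2):
    for build in (form_1, form_2):
        eq1, eq2, eq3 = left_sides(build(theta))
        # (1) and (3) must equal 1, (2) must equal 0.
        assert math.isclose(eq1, 1.0) and math.isclose(eq3, 1.0)
        assert math.isclose(eq2, 0.0, abs_tol=1e-12)
print("both forms satisfy equations (1)-(3) for every tested angle")
```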

In fact, if as an example we give the value of and take the first matrix form, we will obtain the matrix that we have checked to be orthogonal above in the section “Example of a 2×2 orthogonal matrix”:

Properties of an orthogonal matrix

The characteristics of this type of matrix are:

- An orthogonal matrix can never be a singular matrix, since it can always be inverted. Moreover, the inverse of an orthogonal matrix is also an orthogonal matrix.

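- Any orthogonal matrix can be diagonalized over the complex numbers: since it is a normal matrix, it is unitarily diagonalizable. It is not always diagonalizable over the real numbers, however; for example, a rotation by 90° in the plane has eigenvalues $i$ and $-i$.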

See: how to perform matrix diagonalization.

- All the eigenvalues of an orthogonal matrix have modulus 1.

See: how to calculate the eigenvalues of a matrix.

- Any orthogonal matrix is also a normal matrix, because $A\cdot A^T = A^T\cdot A$.

- The analog of the orthogonal matrix over the complex numbers is the unitary matrix.

- Obviously, the identity matrix is an orthogonal matrix.

See definition of identity matrix.

- The set of orthogonal matrices of dimension n×n, together with the matrix product, forms a group called the orthogonal group, denoted O(n). In particular, the product of two orthogonal matrices is another orthogonal matrix.

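- Furthermore, the result of multiplying an orthogonal matrix by its transpose can be expressed entry by entry using the Kronecker delta:

  $$\left(A\cdot A^T\right)_{ij} = \sum_{k=1}^{n} a_{ik}\,a_{jk} = \delta_{ij} = \begin{cases} 1 & \text{if } i = j \\ 0 & \text{if } i \neq j \end{cases}$$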

- Finally, the determinant of an orthogonal matrix is always equal to +1 or -1, since $\det(A)^2 = \det(A)\det(A^T) = \det(A\cdot A^T) = \det(I) = 1$.
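Several of these properties can be observed numerically on 2×2 examples. The plain-Python sketch below uses a rotation and a reflection with an arbitrarily chosen angle; the helper names are hypothetical, and `eigenvalues2` simply applies the quadratic formula to the characteristic polynomial λ² − tr(A)·λ + det(A), using `cmath.sqrt` so that complex eigenvalues are handled. It prints the determinant and the eigenvalue moduli of each matrix, then checks that their product is again orthogonal.

```python
import cmath
import math

def transpose(a):
    """Transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*a)]

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def is_orthogonal(a, tol=1e-9):
    """True if (A·A^T)_ij equals the Kronecker delta δ_ij within tol."""
    p = matmul(a, transpose(a))
    n = len(a)
    return all(math.isclose(p[i][j], 1.0 if i == j else 0.0, abs_tol=tol)
               for i in range(n) for j in range(n))

def det2(a):
    """Determinant of a 2×2 matrix."""
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]

def eigenvalues2(a):
    """Roots of λ² - tr(A)·λ + det(A) = 0 for a 2×2 matrix (possibly complex)."""
    tr = a[0][0] + a[1][1]
    disc = cmath.sqrt(tr * tr - 4 * det2(a))
    return (tr + disc) / 2, (tr - disc) / 2

t = 0.7
rotation = [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]
reflection = [[math.cos(t), math.sin(t)], [math.sin(t), -math.cos(t)]]

for name, q in (("rotation", rotation), ("reflection", reflection)):
    moduli = [round(abs(lam), 12) for lam in eigenvalues2(q)]
    print(name, "det =", round(det2(q), 12), "eigenvalue moduli =", moduli)

# Group property: the product of two orthogonal matrices is orthogonal,
# and determinants multiply (here 1 · (-1) = -1).
prod = matmul(rotation, reflection)
print("product orthogonal:", is_orthogonal(prod), "det =", round(det2(prod), 12))
```

The rotation's eigenvalues are the complex pair cos t ± i·sin t, which also shows why such a matrix cannot be diagonalized over the real numbers.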

Solved exercise of orthogonal matrices

Next we are going to solve an exercise on orthogonal matrices.

- Given the following square matrix of order 3, find the values of

and

so that the matrix is orthogonal:

For the matrix to be orthogonal, its product with its transpose must be equal to the identity matrix. Thus:

We carry out the matrix multiplication:

Since both matrices must be equal entry by entry, we can equate the entries in the upper-left corner, which gives the following equation:

We solve the equation:

However, the positive solution does not satisfy some of the other equations, for example the one for the upper-right entry, so only the negative solution is valid.

On the other hand, to calculate the other variable we can equate, for example, the entries in the second row of the first column:

Substituting the value found above into this equation:

Ultimately, the only possible solution is:

So the orthogonal matrix that has those values is:

Applications of orthogonal matrices

Although it may not seem like it, orthogonal matrices are very important in mathematics, especially in the field of linear algebra.

In geometry, orthogonal matrices represent isometric transformations of real vector spaces, that is, transformations that preserve distances and angles; for that reason they are called orthogonal transformations. Furthermore, these transformations are automorphisms of the vector space (isomorphisms from the space onto itself). They can be rotations, reflections, or compositions of both, such as the inversion through the origin $x \mapsto -x$.
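The isometry property can be illustrated with a short plain-Python sketch: applying an orthogonal matrix to two vectors leaves both their distance and their dot product (and therefore the angle between them) unchanged. The rotation angle, the vectors and the helper names are arbitrary choices for this illustration; `math.dist` requires Python 3.8 or later.

```python
import math

def matvec(a, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(x * y for x, y in zip(row, v)) for row in a]

def dot(u, v):
    """Euclidean dot product."""
    return sum(x * y for x, y in zip(u, v))

t = 1.1
rotation_z = [[math.cos(t), -math.sin(t), 0.0],
              [math.sin(t),  math.cos(t), 0.0],
              [0.0,          0.0,         1.0]]   # rotation about the z-axis
inversion = [[-1.0, 0.0, 0.0],
             [0.0, -1.0, 0.0],
             [0.0, 0.0, -1.0]]                    # inversion through the origin, x -> -x

u = [1.0, 2.0, 3.0]
v = [-2.0, 0.5, 4.0]

for name, q in (("rotation", rotation_z), ("inversion", inversion)):
    qu, qv = matvec(q, u), matvec(q, v)
    # Distances and dot products (hence angles) are unchanged by q.
    print(name,
          math.isclose(math.dist(u, v), math.dist(qu, qv)),
          math.isclose(dot(u, v), dot(qu, qv)))
```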

Finally, this type of matrix is also used in physics, since it describes the orientation and motion of rigid bodies. Orthogonal matrices even appear in the formulation of certain field theories.